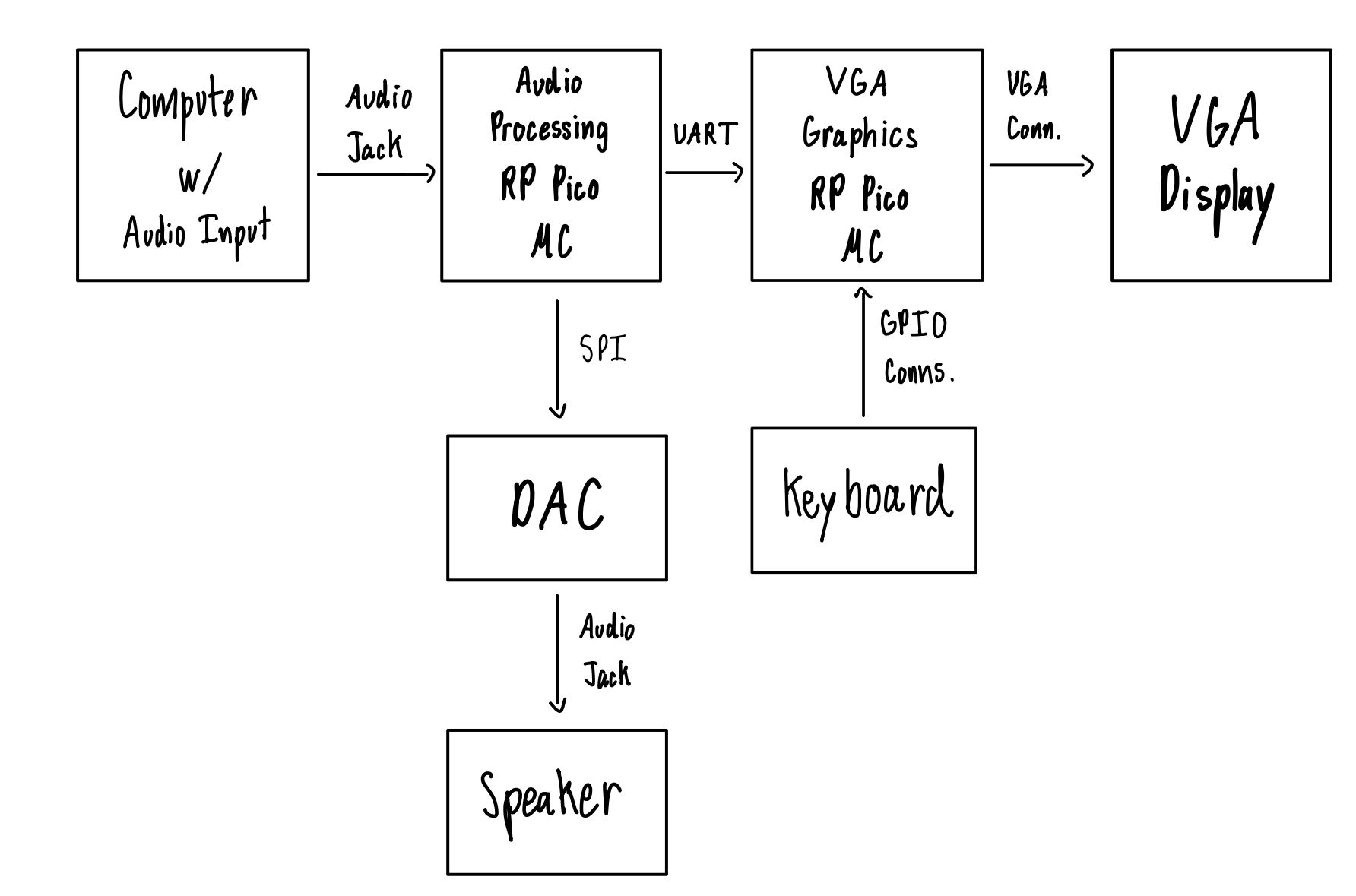

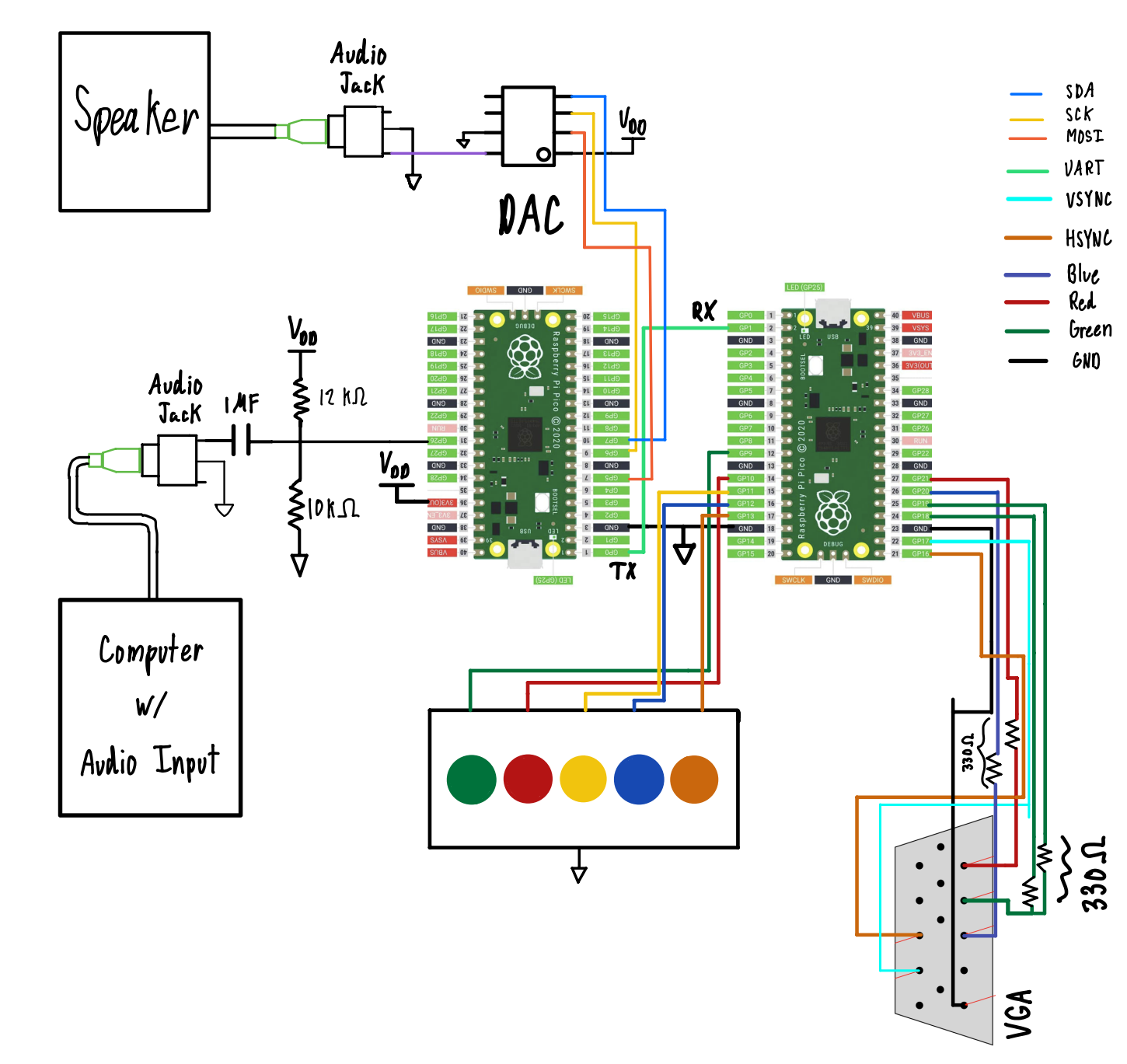

In this hardware description, we walk through each subsection of the system in turn.

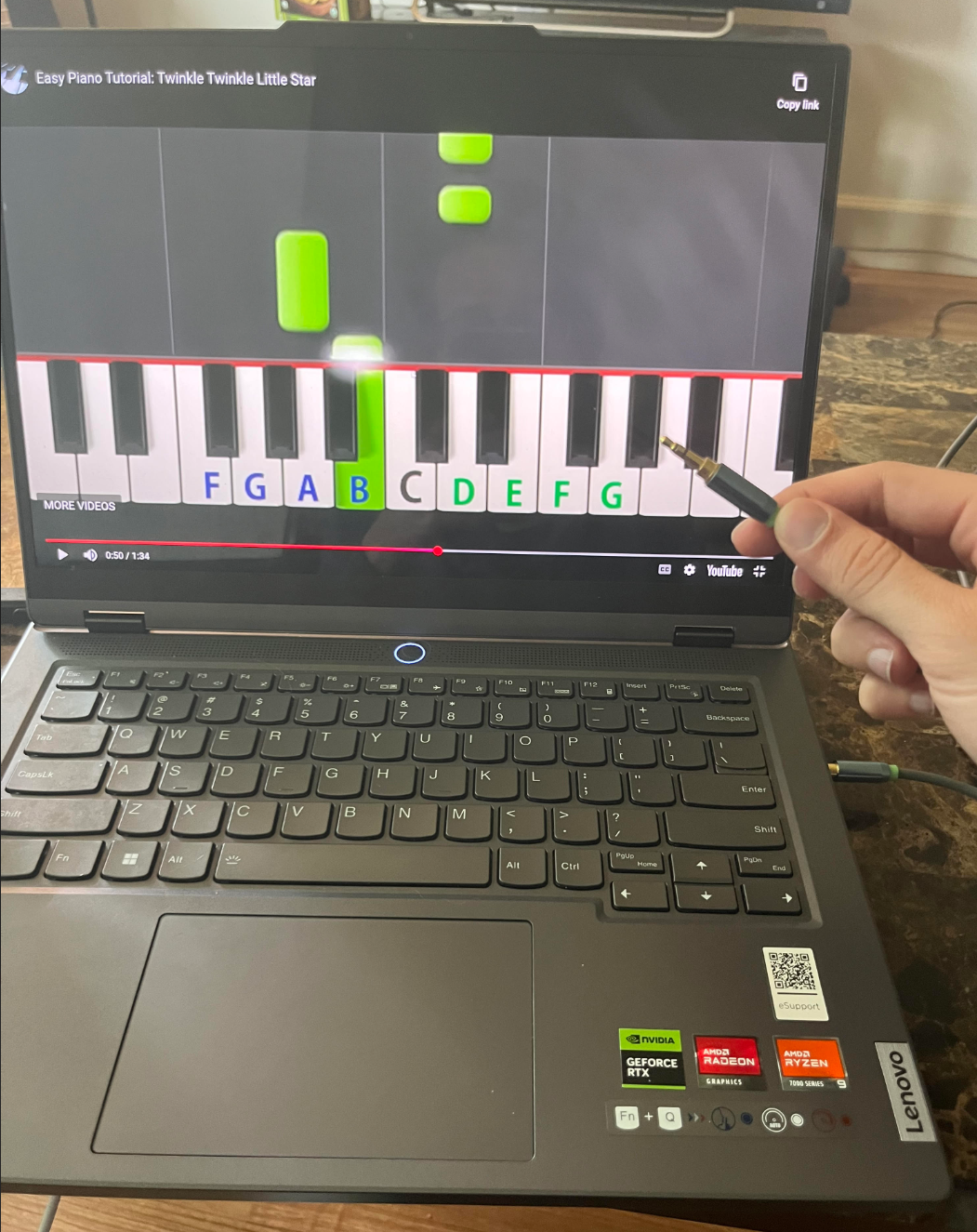

Computer audio input into Audio Processing Pico ADC

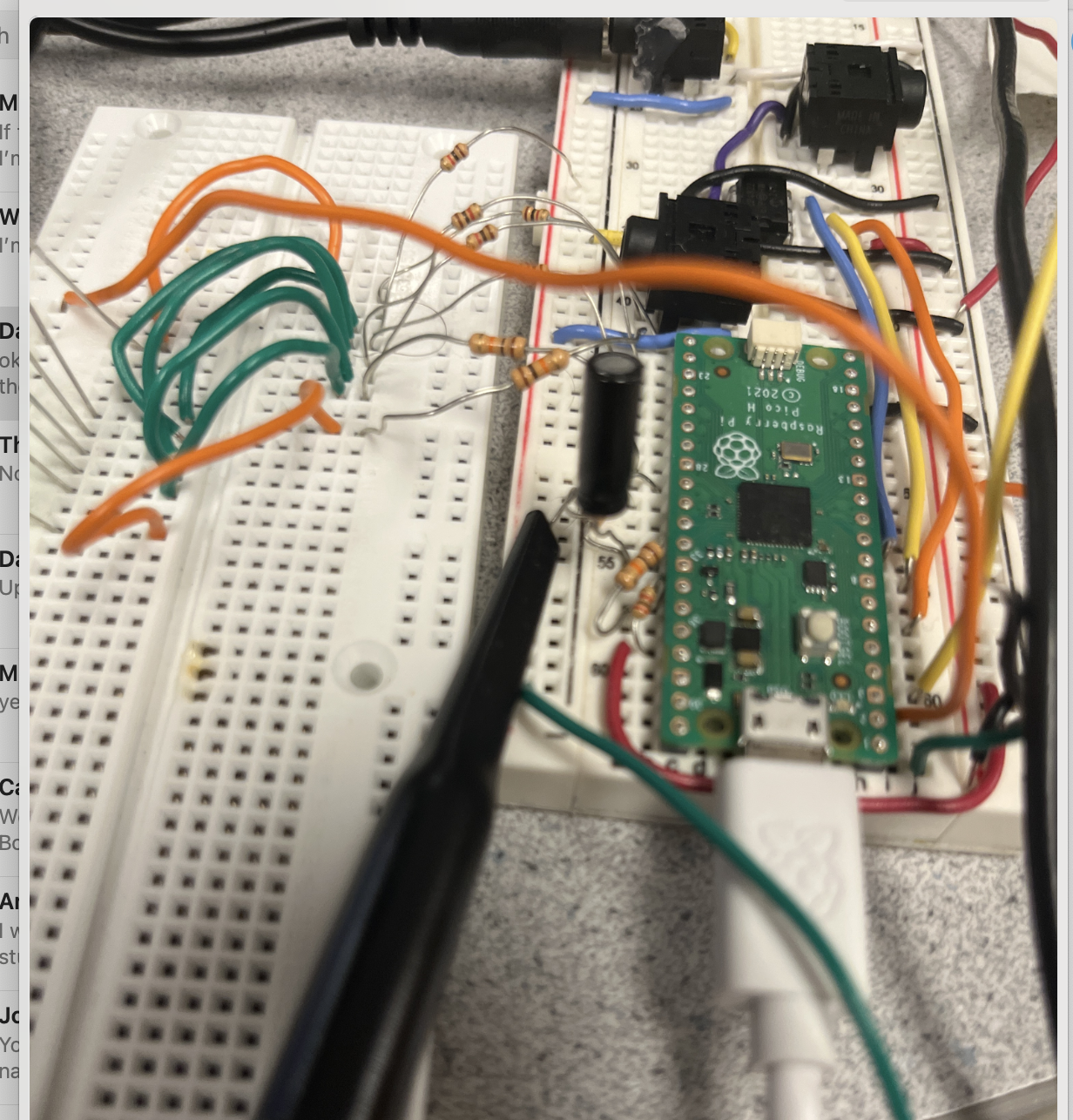

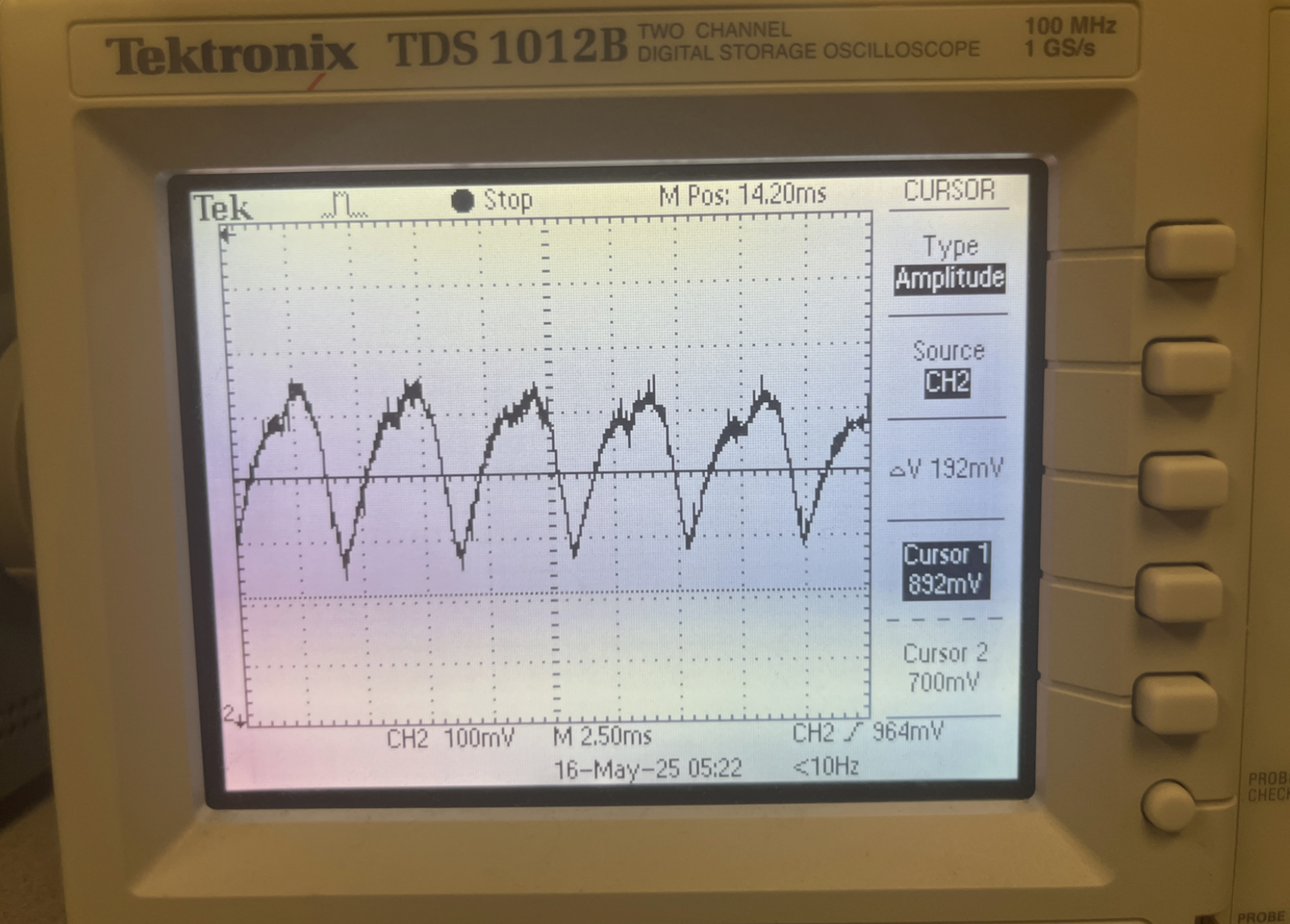

The song audio is sent from the computer through an audio cable plugged into an audio jack. At this point the signal has a mean of zero volts and swings between positive and negative values.

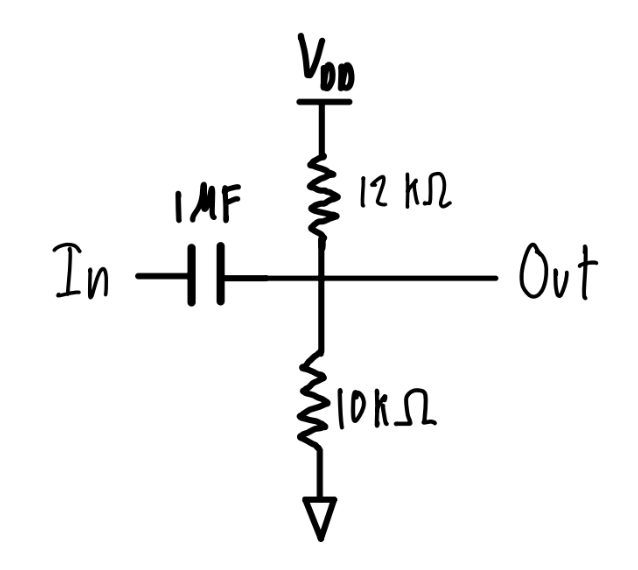

We then pass the signal through a sub-circuit made up of a DC-blocking (coupling) capacitor and a resistor divider. The capacitor removes the DC content of the signal and passes only its AC content. The voltage divider then shifts that AC content up to a prescribed mean value.

The mean value is calculated as follows based on the fact that VDD is 3.3V:

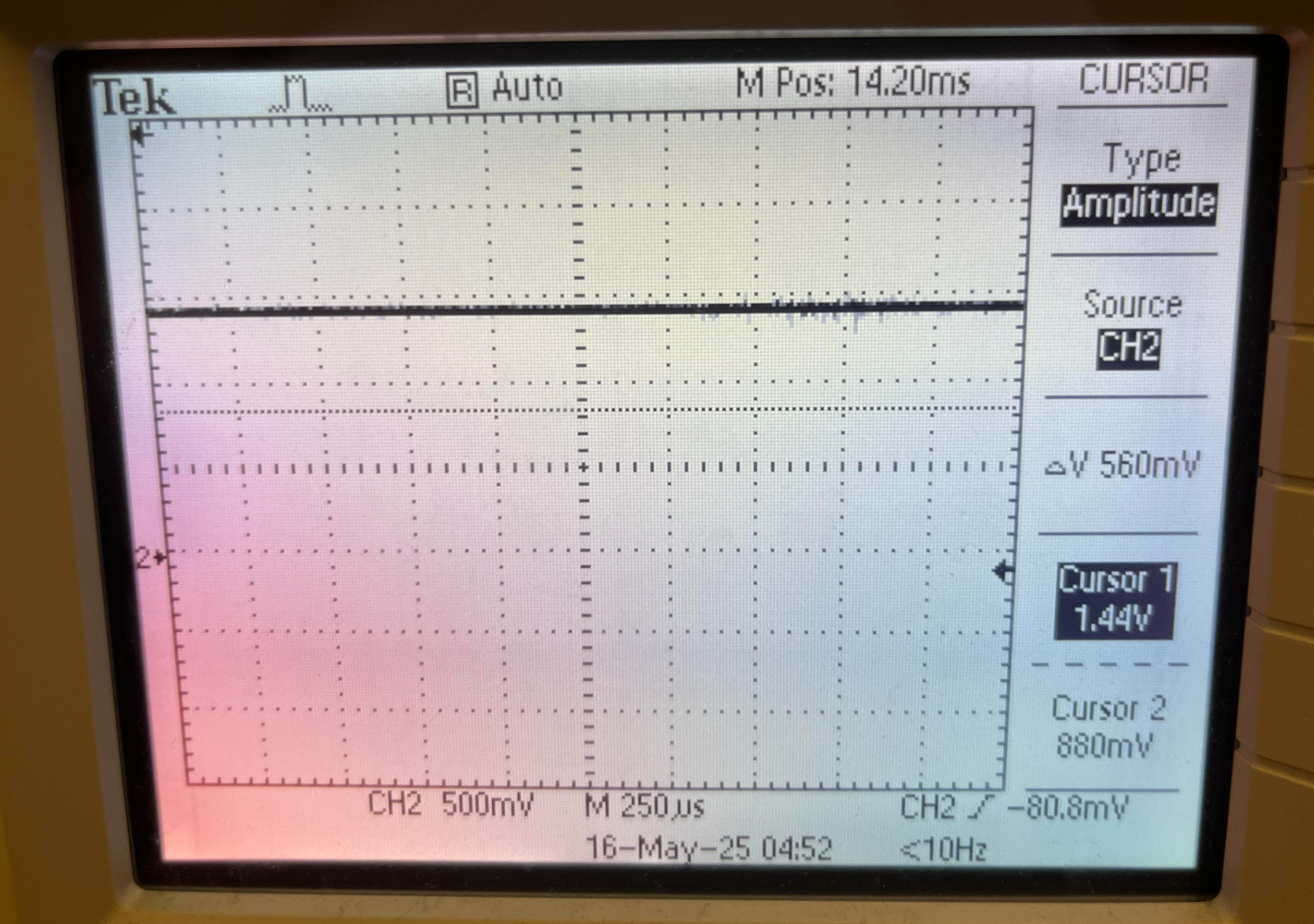

Mean value = 10 kOhm / (12 kOhm + 10 kOhm) × 3.3 V = 1.5 V

*In practice, the measured mean value is 1.44 V.

Shifting the mean up to this level keeps the audio signal within the 0 V to 3.3 V input range of the ADC, so it can be read without clipping.
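The arithmetic above can be checked with a short C sketch. It computes the divider bias and the largest signal peak that fits between the rails without clipping. It assumes that the 12 kOhm resistor goes to VDD and the 10 kOhm resistor goes to ground, which is what the formula implies. The function names are illustrative and are not taken from the project code.

```c
/* Midpoint voltage of a two-resistor divider between vdd and ground.
 * Assumption: r_top connects to VDD and r_bottom connects to ground. */
static double divider_bias(double r_top, double r_bottom, double vdd) {
    return vdd * r_bottom / (r_top + r_bottom);
}

/* Largest peak amplitude (in volts) that fits in [0, vdd] around the bias
 * without clipping: the distance from the bias to the nearer rail. */
static double max_peak_without_clipping(double bias, double vdd) {
    double to_ground = bias;
    double to_vdd = vdd - bias;
    return to_ground < to_vdd ? to_ground : to_vdd;
}
```

With the measured 1.44 V bias, ground is the nearer rail. The input can therefore swing by up to about 1.44 V in either direction before the ADC clips at 0 V.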

Audio Processing Pico SPI to DAC

The Audio Processing Pico connects to the DAC over SPI. Data only flows from the Pico to the DAC and not the other way, so only MOSI is used. The connections also include the standard VDD, GND, SDA, and SCK lines. The DAC then outputs the time-delayed signal to the speaker through an audio jack and cable.
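The text does not name the DAC part. The sketch below assumes a Microchip MCP4822, a common 12-bit SPI DAC whose 16-bit command word packs configuration bits above the sample. It builds the word for channel A from a 12-bit delayed ADC sample. In firmware, the word could then be sent with the Pico SDK's `spi_write16_blocking()`, with the SPI port set to 16-bit frames. The macro and function names are hypothetical.

```c
#include <stdint.h>

/* Assumed DAC: MCP4822. Command word layout:
 * bit 15 = channel select (0 = A), bit 14 = unused on the MCP4822,
 * bit 13 = gain select (1 = 1x), bit 12 = shutdown (1 = output active),
 * bits 11..0 = sample data. */
#define DAC_CONFIG_CHAN_A 0x3000u

/* Build the channel A command word from a 12-bit sample. The RP2040 ADC
 * is also 12 bits, so delayed samples can be passed through unscaled. */
static uint16_t dac_command_chan_a(uint16_t sample) {
    return (uint16_t)(DAC_CONFIG_CHAN_A | (sample & 0x0FFFu));
}
```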

Audio Processing Pico UART TX to VGA Graphics Pico UART RX

The VGA Graphics Pico needs to know when a new note should appear on the screen and which note it should be. It gets this information from the Audio Processing Pico over a UART connection. UART was a good fit for two reasons: the information flows at a low rate (about one note per second), and UART is simple to implement for this purpose. The connection consists only of a ground line and a single TX-to-RX line, because the Audio Processing Pico sends information to the VGA Graphics Pico but never the reverse.
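The exact byte format sent over the link is not described here. The following is a minimal sketch that assumes one byte per detected note, carrying the 0-4 band index as an ASCII digit. The receiver ignores any other byte. The names `encode_note` and `decode_note` are hypothetical. On the sending side, the byte could be written with the Pico SDK's `uart_putc_raw()`.

```c
#include <stdbool.h>
#include <stdint.h>

#define NUM_BANDS 5

/* Hypothetical wire format: one byte per note, the band index 0..4 sent as
 * the ASCII digit '0'..'4' so it stays readable on a serial terminal.
 * The caller is expected to pass a band in 0..4. */
static uint8_t encode_note(int band) {
    return (uint8_t)('0' + band);
}

/* Returns true and stores the band if the byte is a valid note message;
 * any other byte (line noise, stray characters) is rejected. */
static bool decode_note(uint8_t byte, int *band) {
    if (byte < '0' || byte >= '0' + NUM_BANDS) {
        return false;
    }
    *band = byte - '0';
    return true;
}
```

At 115200 baud, one byte with its start and stop bits (10 bits in total) takes about 87 µs. At roughly one note per second, the link is almost always idle.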

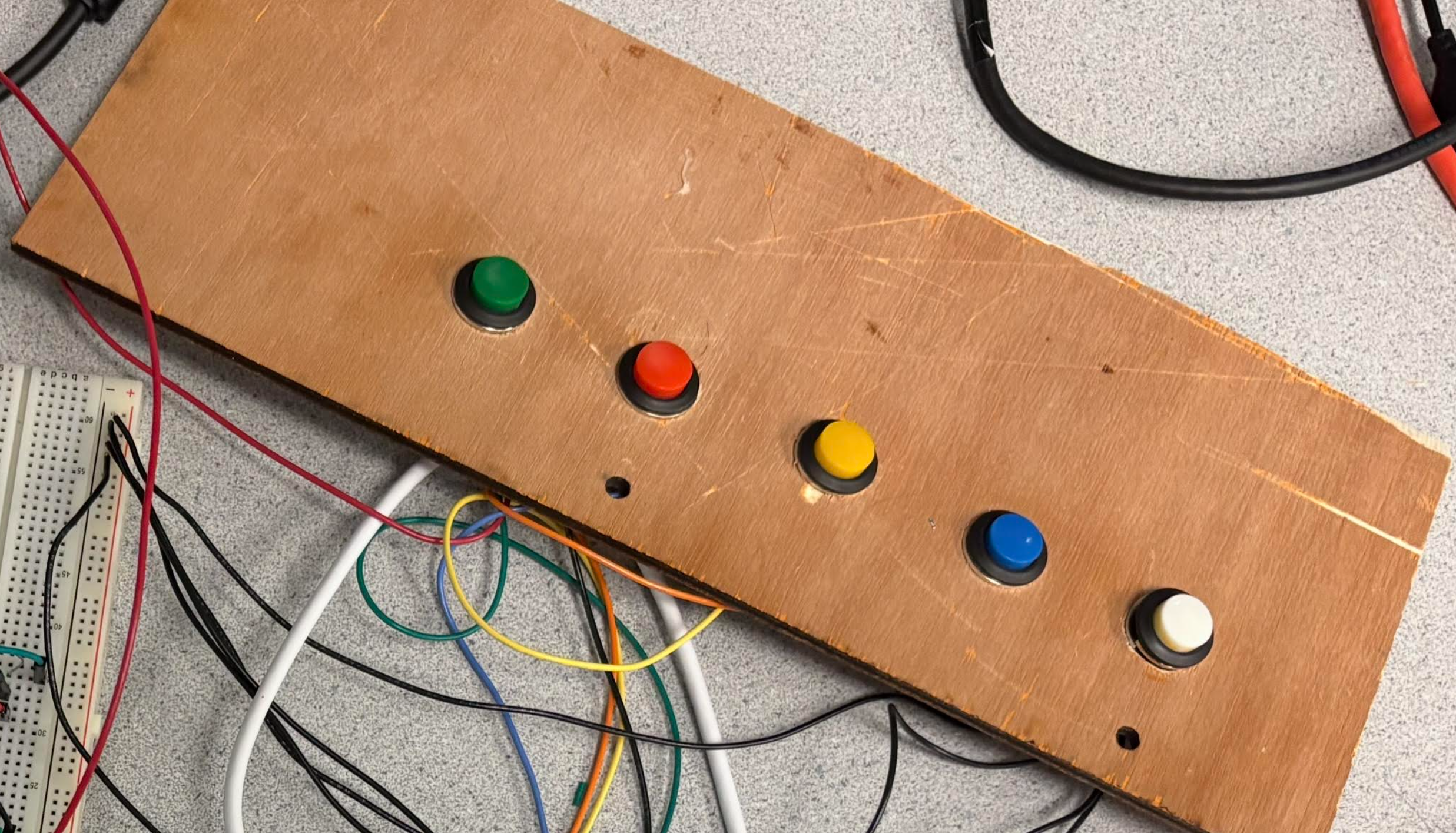

Keyboard to VGA Graphics Pico

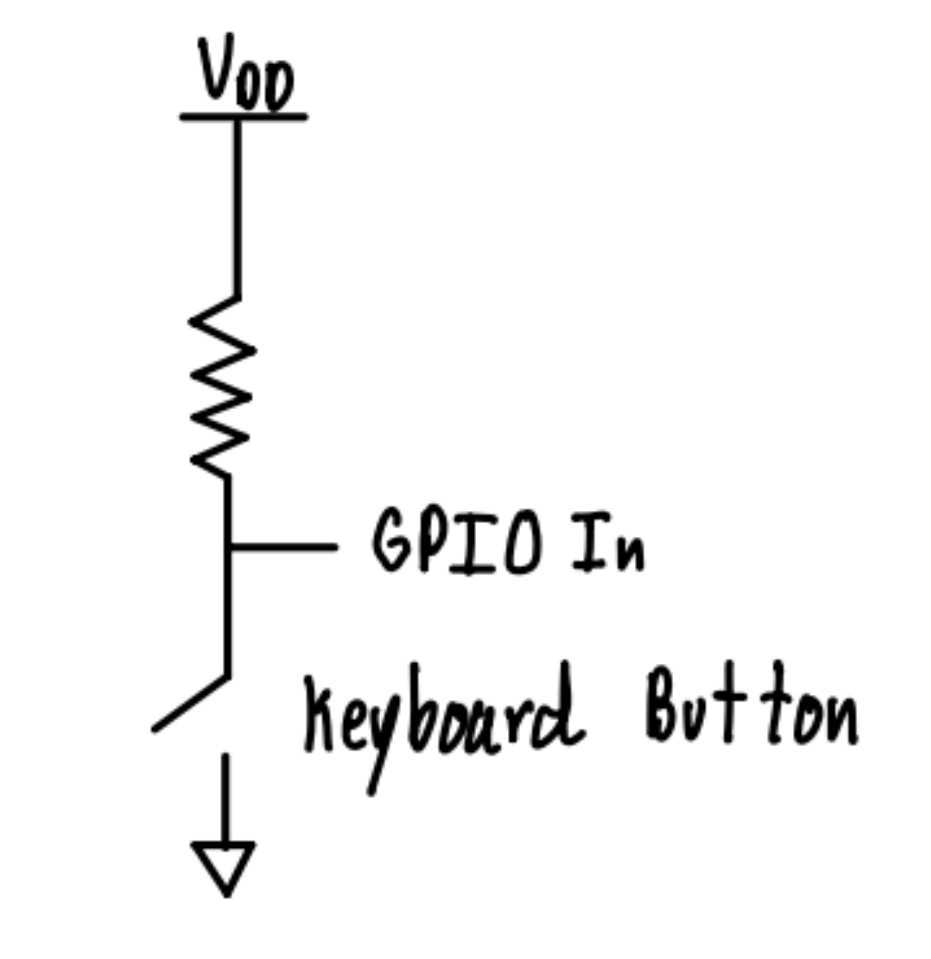

The VGA Graphics Pico receives user input through the five buttons on the keyboard, which act as active-low switches. Each button's GPIO is configured with an internal pull-up resistor, so it reads high by default. The other pin of each button is connected to ground, so pressing a button pulls its GPIO low. This lets the system detect which button the user has pressed.
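The active-low reading described above can be sketched in portable C. On the Pico, each pin would be set up with `gpio_init()`, `gpio_set_dir(pin, GPIO_IN)`, and `gpio_pull_up()`, and a snapshot of all pin levels could come from `gpio_get_all()`. The function below turns such a snapshot into a mask of pressed buttons. The pin numbers are hypothetical.

```c
#include <stdint.h>

#define NUM_BUTTONS 5

/* Hypothetical GPIO numbers for the five keyboard buttons. */
static const int button_pins[NUM_BUTTONS] = {10, 11, 12, 13, 14};

/* gpio_levels has bit n = level of GPIO n (the layout of gpio_get_all()).
 * With pull-ups, an idle button reads 1 and a pressed button reads 0, so a
 * low bit means "pressed". Bit i of the result corresponds to button i. */
static uint32_t pressed_mask(uint32_t gpio_levels) {
    uint32_t mask = 0;
    for (int i = 0; i < NUM_BUTTONS; i++) {
        if ((gpio_levels & (1u << button_pins[i])) == 0) {
            mask |= 1u << i;
        }
    }
    return mask;
}
```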

VGA Graphics Pico to VGA Display

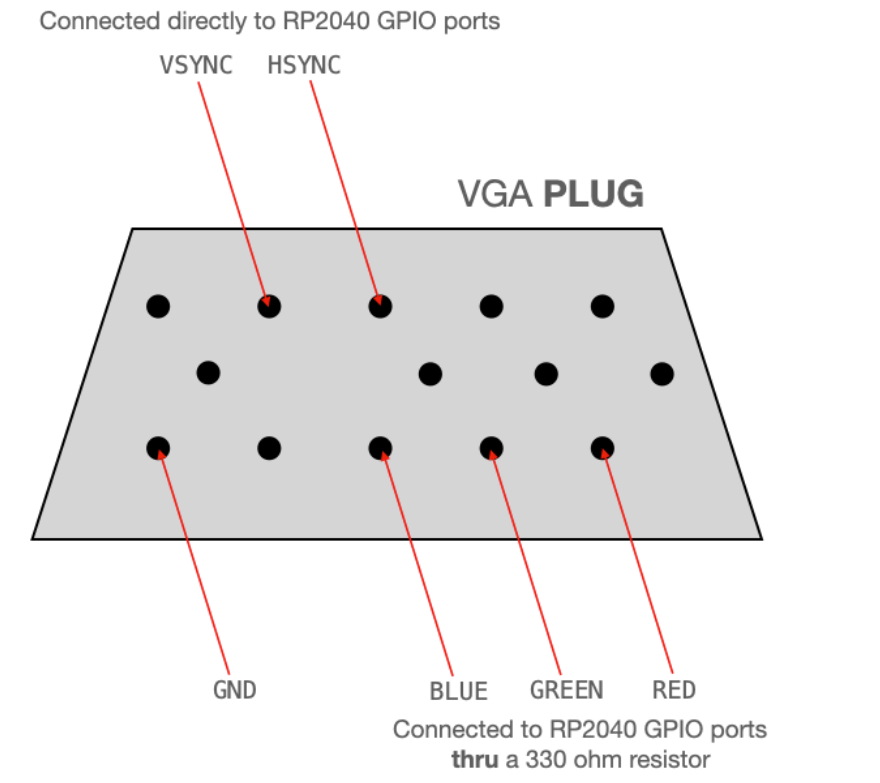

The VGA Graphics Pico connects to the VGA display connector as shown in the image above.

More information can be found on this page:

VGA Driver for RP2040 – Van Hunter Adams

Software Design

Program Details for Audio Processing Pico

High-level software structure:

The code for this project is divided into four main sections:

1. Global setup section

2. main() function

3. alarm_irq()

4. Helper functions

At the start of the code, a section is dedicated to including libraries, defining macros, and initializing global variables and arrays. We refer to this as the global setup section.

The main() function takes care of initializing the GPIO pins, UART connection, SPI, the ADC, LEDs, and the interrupt timer.

alarm_irq() is an interrupt service routine (ISR) that handles audio sampling and processing. It is triggered by an alarm that fires at 10 kHz, our chosen sampling frequency. During each cycle, it takes an ADC sample, updates several buffers, outputs audio to the DAC, and runs the frequency measurement logic.

This code uses two helper functions: bandpass() and crossover_LEDs().

bandpass() takes a frequency value (a float) as its parameter and determines which of the five frequency “bins” it falls into. It then returns the corresponding bin number, from 0 to 4. This number is what gets sent over UART to the other Pico.
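The bin boundaries are not given in this section. This is therefore only one possible shape for a function like bandpass(), not the project's code. The four edge frequencies below are hypothetical placeholders that divide the spectrum into five bins.

```c
/* Hypothetical bin edges in Hz; the real boundaries would be tuned to the
 * song. Bin 0 is below band_edges[0], bin k (1..3) covers
 * [band_edges[k-1], band_edges[k]), and bin 4 is at or above band_edges[3]. */
static const float band_edges[4] = {262.0f, 330.0f, 392.0f, 494.0f};

/* Map a measured frequency to a bin index 0..4. */
static int bandpass(float freq_hz) {
    int bin = 0;
    for (int i = 0; i < 4; i++) {
        if (freq_hz >= band_edges[i]) {
            bin = i + 1;
        }
    }
    return bin;
}
```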

crossover_LEDs() was implemented for debugging during the prototyping phase. We needed a way to display the number of crossovers the system was counting, so that we could understand the frequency it was measuring. printf() was too computationally expensive to call inside an interrupt service routine, so we used a set of LEDs to display the crossover count in binary instead. This proved to be a simple and effective way around a tough stumbling block.
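The binary LED readout can be sketched as a pure function that decides which LEDs to light. On the Pico, each entry would then be written to its pin with `gpio_put()`. The LED count and function name are hypothetical.

```c
#include <stdbool.h>

#define NUM_DEBUG_LEDS 8  /* hypothetical number of debug LEDs */

/* Set led_on[i] to bit i of count (LED 0 = least significant bit).
 * Counts needing more bits than there are LEDs wrap modulo 2^NUM_DEBUG_LEDS. */
static void crossover_led_pattern(unsigned count, bool led_on[NUM_DEBUG_LEDS]) {
    for (int i = 0; i < NUM_DEBUG_LEDS; i++) {
        led_on[i] = (count >> i) & 1u;
    }
}
```

Computing the pattern costs only a few shifts, so it is cheap enough to run inside the ISR, unlike `printf()`.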

alarm_irq():

The global setup section and the main() function are both fairly straightforward. As described above, they consist primarily of variable instantiations and setup calls.

The one part of the code worth elaborating on is the alarm_irq() function and its underlying logic. The interrupt service routine runs at 10 kHz to provide the audio sampling rate our system needs (refer to the Background Math section for more details). It performs several tasks each cycle.

First, a 22,000-element audio sample buffer is updated by one value during each ISR cycle. Through a clever use of indexing, this buffer lets audio samples be read from the ADC in real time and then sent to the speaker with a 2.2 second delay (22,000 samples / 10,000 samples per second = 2.2 s). One index marks where each new ADC input sample is placed. A second index marks the sample in the buffer that is being output to the DAC. The input index is initialized to 0 and the output index is initialized to 1. Each cycle, both indices increase by one, wrapping around from 21,999 to 0, so the buffer behaves as a FIFO. The buffer array is initialized to all zeros, so the system first outputs 0 values (silence) to the DAC for 2.2 seconds. After that, it continually outputs the audio data from 2.2 seconds earlier, creating a consistent fixed delay. This 2.2 second delay matches the time it takes a note to travel from the top of the screen down to the note receptor.
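The indexing scheme above can be sketched as a single per-sample step function. It writes the new sample at the input index, reads the output sample at the index just ahead of it, and advances both with wraparound. The global names and the step function are hypothetical. In the real ISR, the returned value would go to the DAC.

```c
#include <stdint.h>

#define DELAY_LEN 22000  /* 2.2 s at 10 kHz */

static uint16_t delay_buf[DELAY_LEN];  /* zero-initialized: output starts silent */
static int in_idx = 0;                 /* where the next ADC sample is written */
static int out_idx = 1;                /* which stored sample goes to the DAC */

/* One ISR cycle: store the newest ADC sample and return the delayed one.
 * out_idx always points at the slot that will be overwritten next (the
 * oldest sample), so the delay is DELAY_LEN - 1 samples, about 2.2 s. */
static uint16_t delay_step(uint16_t adc_sample) {
    delay_buf[in_idx] = adc_sample;
    uint16_t out = delay_buf[out_idx];
    in_idx = (in_idx + 1) % DELAY_LEN;    /* wrap 21,999 -> 0 */
    out_idx = (out_idx + 1) % DELAY_LEN;
    return out;
}
```

The modulo keeps both indices in range. Because the two indices always differ by one, reading and writing never touch the same slot in a single cycle.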

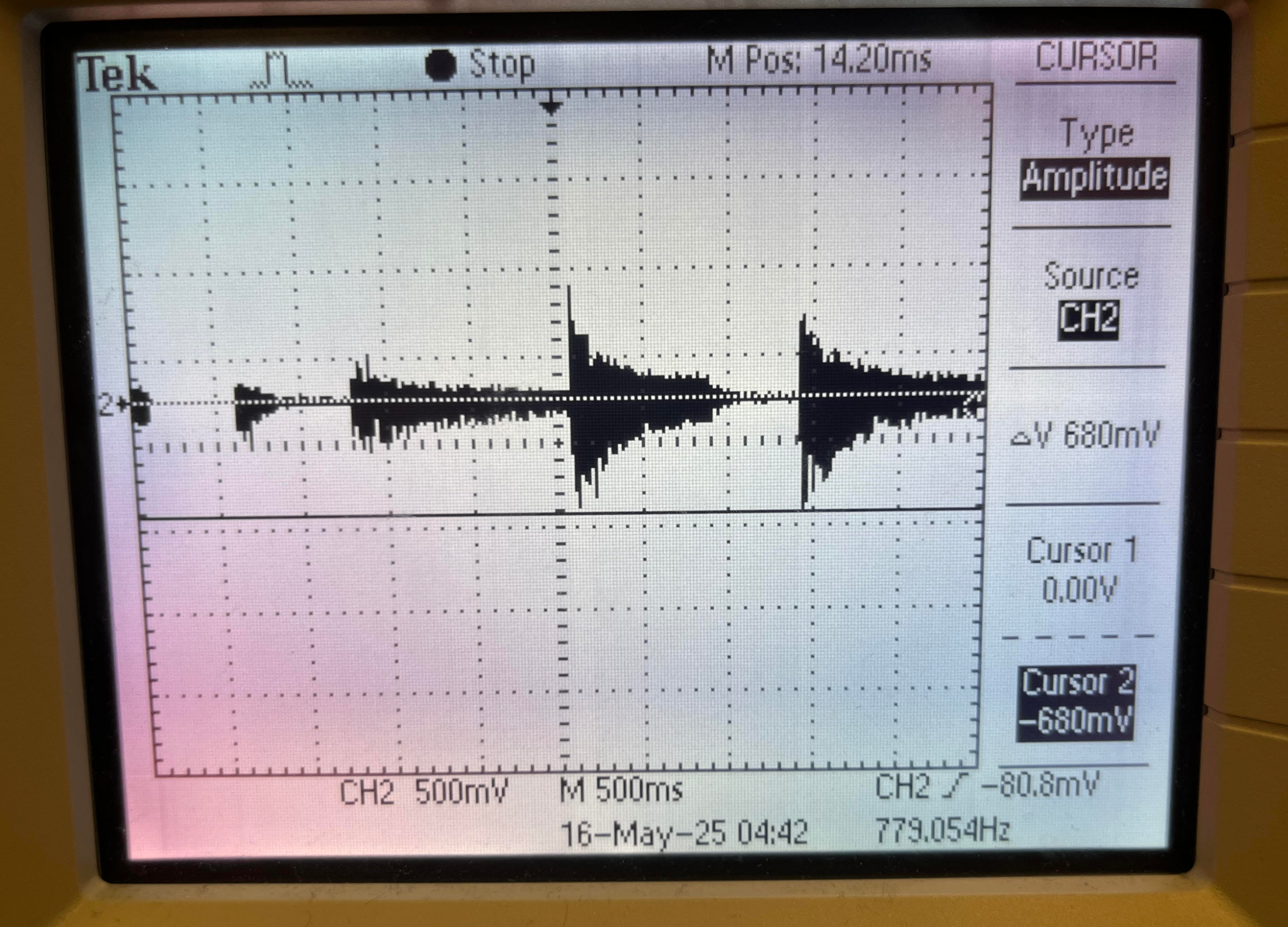

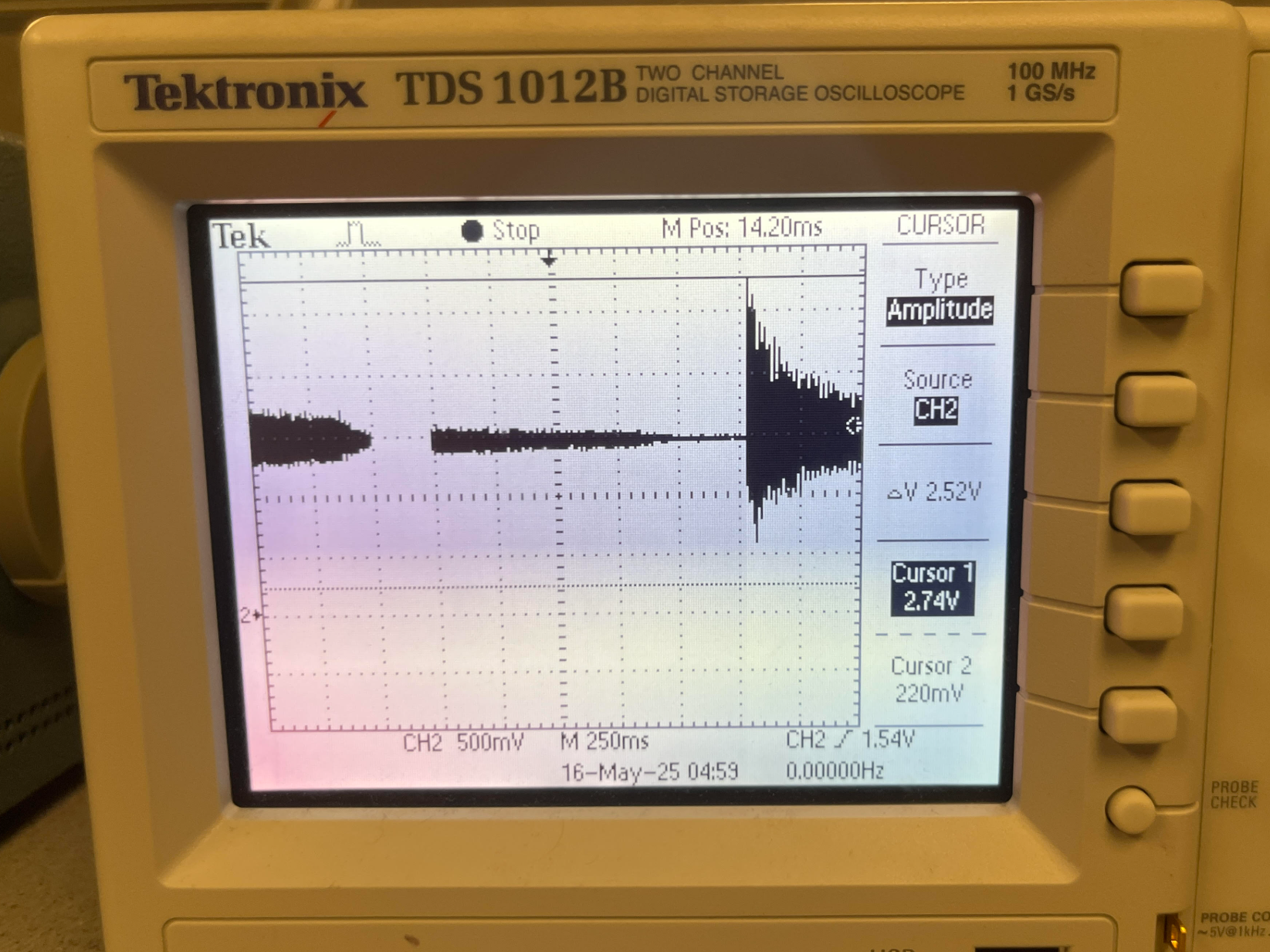

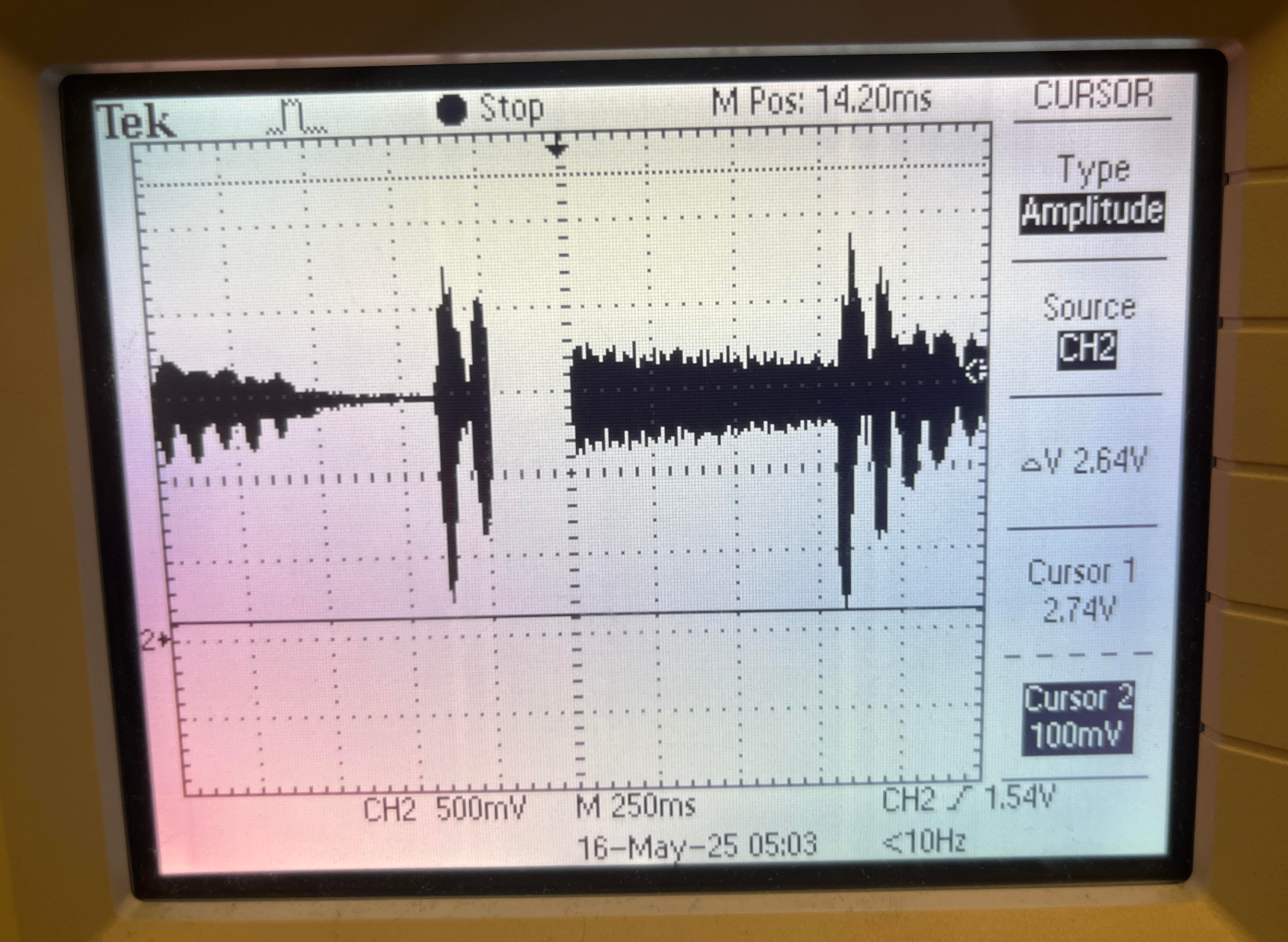

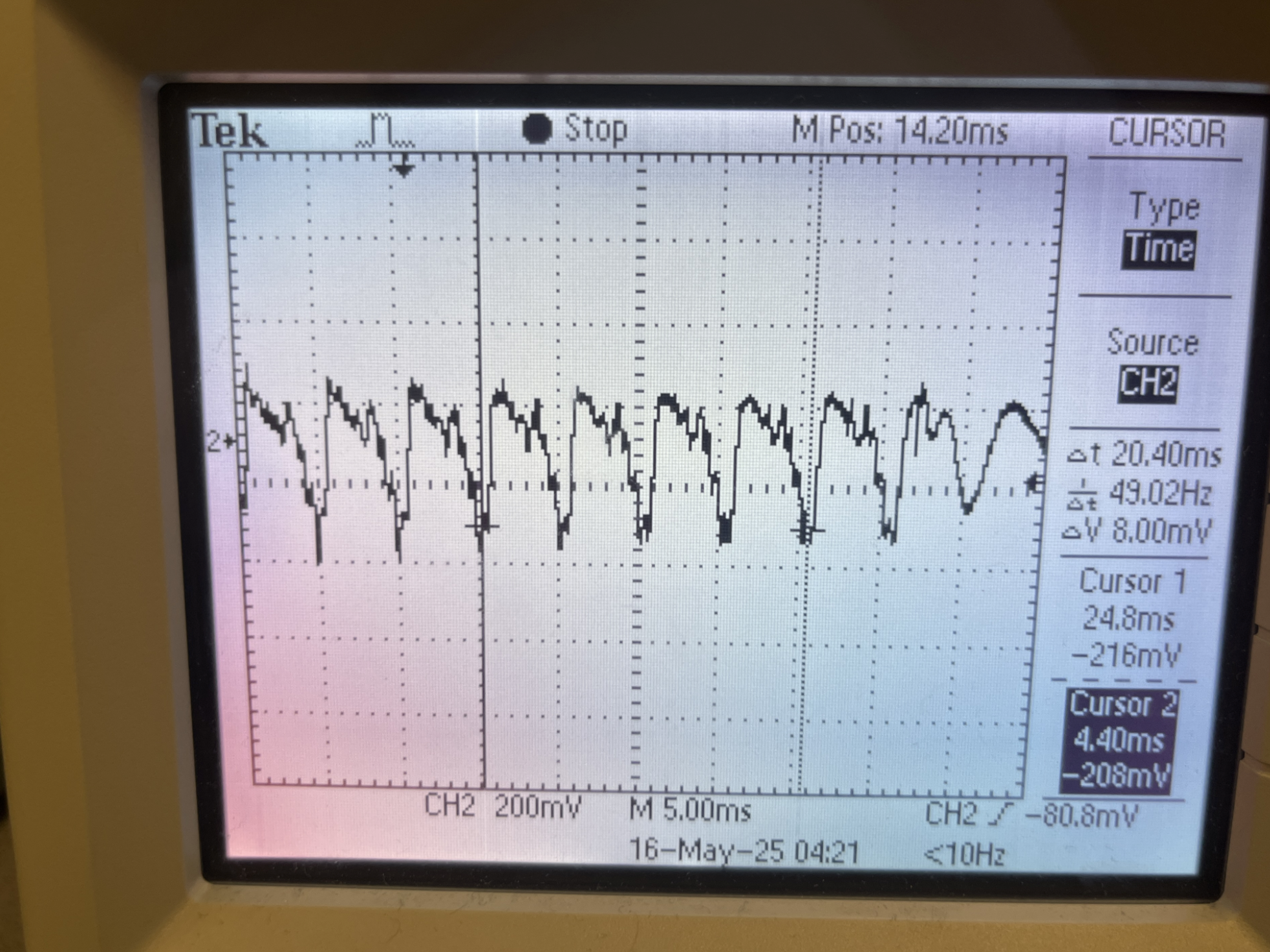

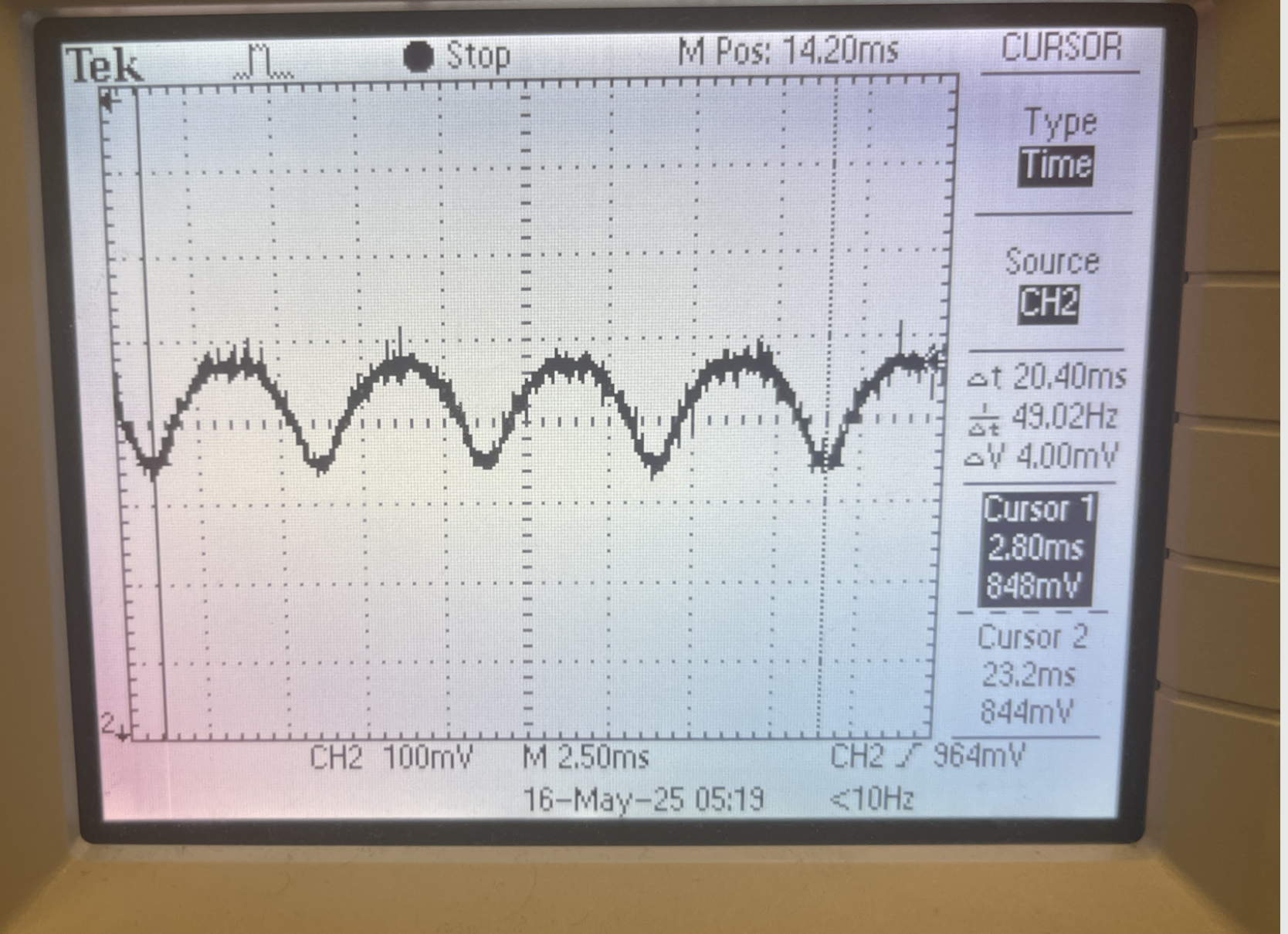

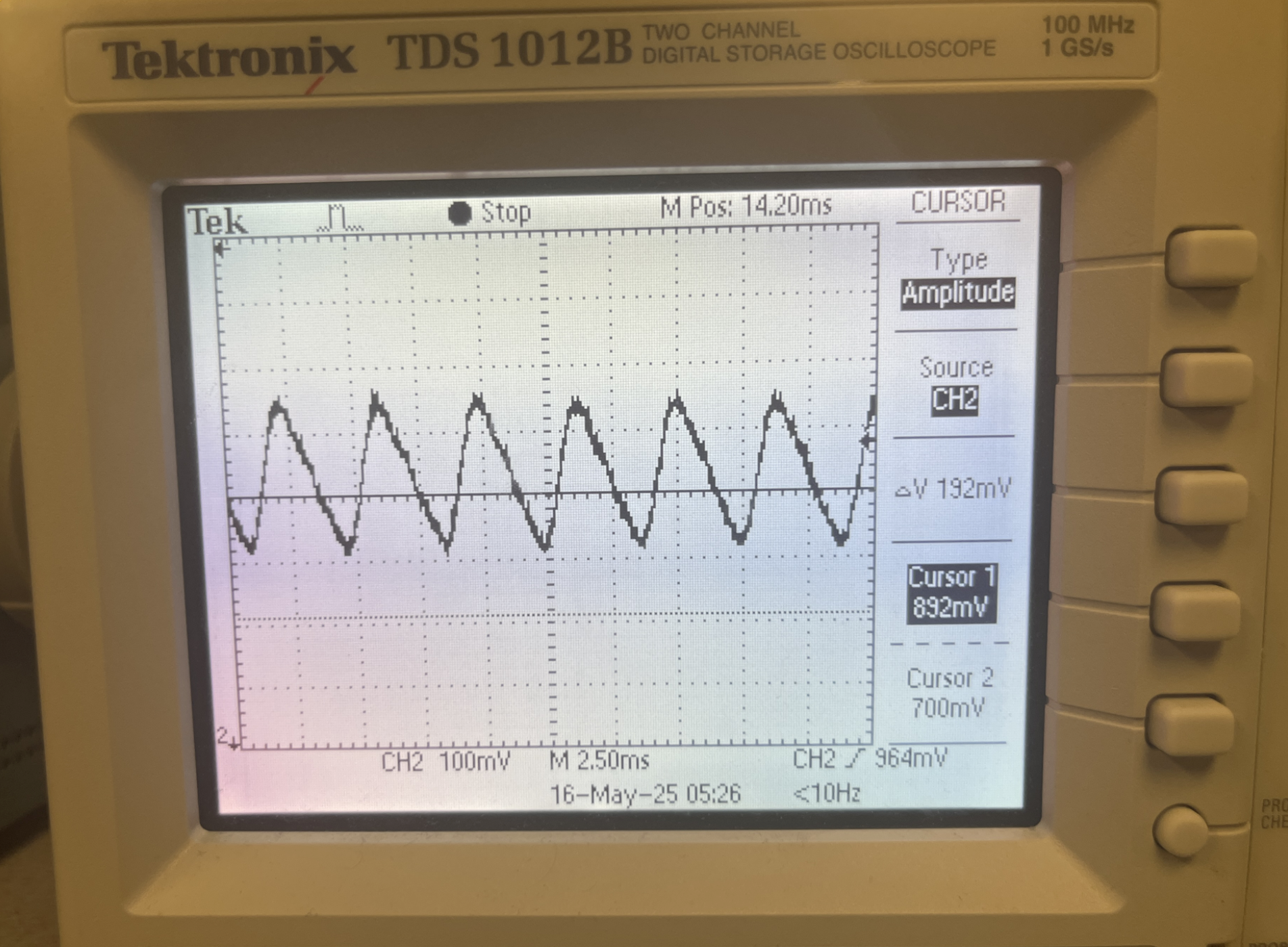

The service routine also handles note detection. Each cycle, the ISR uses threshold voltage logic to detect whether the piano audio has played a new note, enforcing a minimum 50 ms gap between detections. When a piano note is first played, it reaches its maximum amplitude and then tapers off over time. Given this behavior, we chose voltage thresholds that are consistently reached at the start of each note but not reached again after the 50 ms mark. This ensures that a note is detected only once each time it is played. Based on experimental inspection, we set the thresholds to 1.65 V and 1.23 V. These sit the same distance (0.21 V) above and below the 1.44 V mean. A new note is detected if the signal crosses either threshold and at least 50 ms have passed since the previous detection.
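The detection rule above can be sketched as a per-sample function. At 10 kHz, the 50 ms lockout is 500 samples. The thresholds are converted to 12-bit ADC counts under the assumption that the ADC reference is 3.3 V. The names are hypothetical, and the real ISR may structure this differently.

```c
#include <stdbool.h>
#include <stdint.h>

#define ADC_MAX 4095.0
#define VDD 3.3
#define VOLTS_TO_COUNTS(v) ((uint16_t)((v) / VDD * ADC_MAX + 0.5))

#define ONSET_HIGH VOLTS_TO_COUNTS(1.65)  /* about 2048 counts */
#define ONSET_LOW  VOLTS_TO_COUNTS(1.23)  /* about 1526 counts */
#define LOCKOUT_SAMPLES 500               /* 50 ms at 10 kHz */

/* Starts at the lockout value so the very first note can be detected. */
static int samples_since_onset = LOCKOUT_SAMPLES;

/* Called once per sample; returns true when a new note onset is detected. */
static bool detect_onset(uint16_t sample) {
    if (samples_since_onset < LOCKOUT_SAMPLES) {
        samples_since_onset++;
    }
    bool outside = sample > ONSET_HIGH || sample < ONSET_LOW;
    if (outside && samples_since_onset >= LOCKOUT_SAMPLES) {
        samples_since_onset = 0;  /* start a new 50 ms lockout */
        return true;
    }
    return false;
}
```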

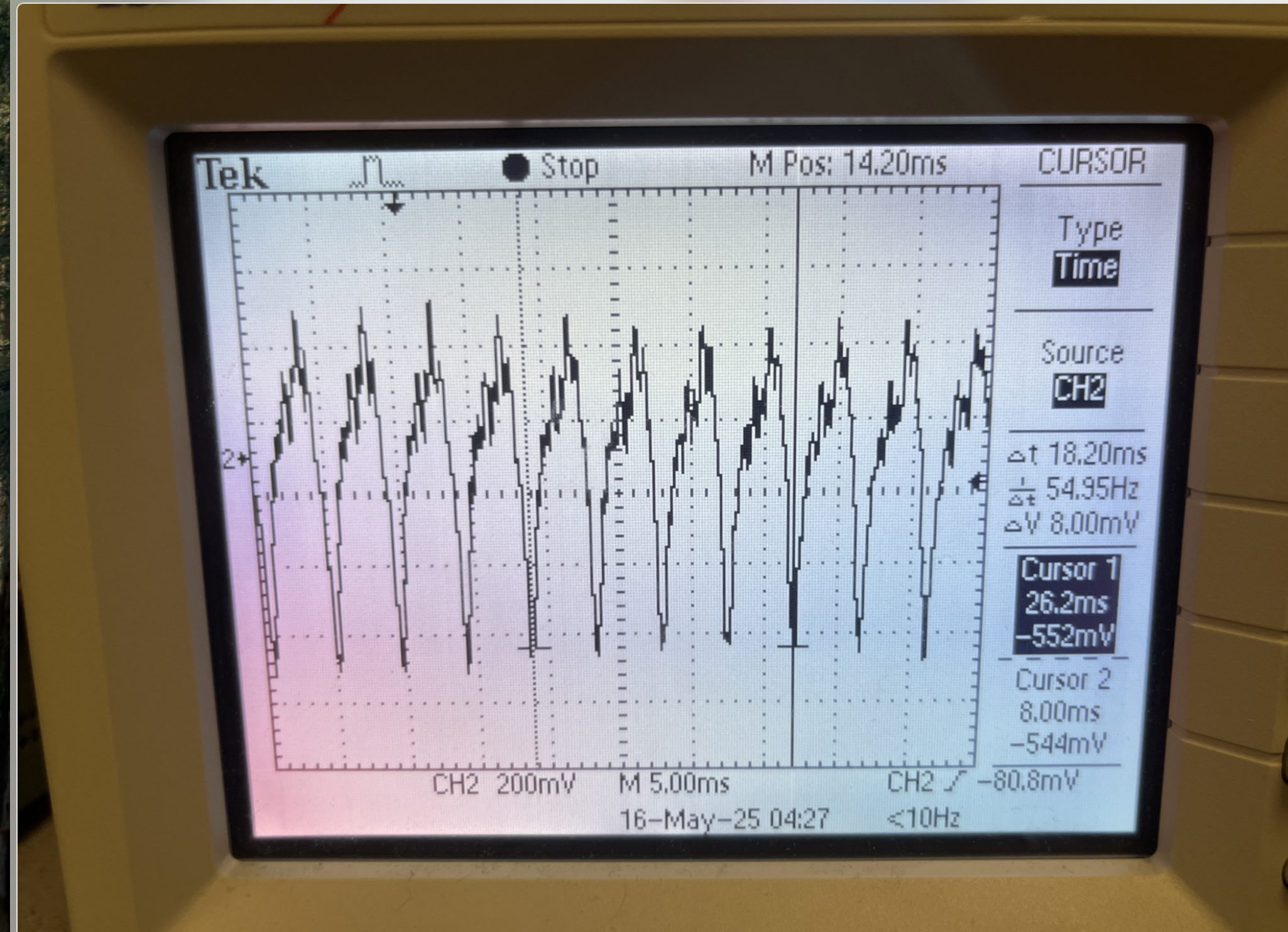

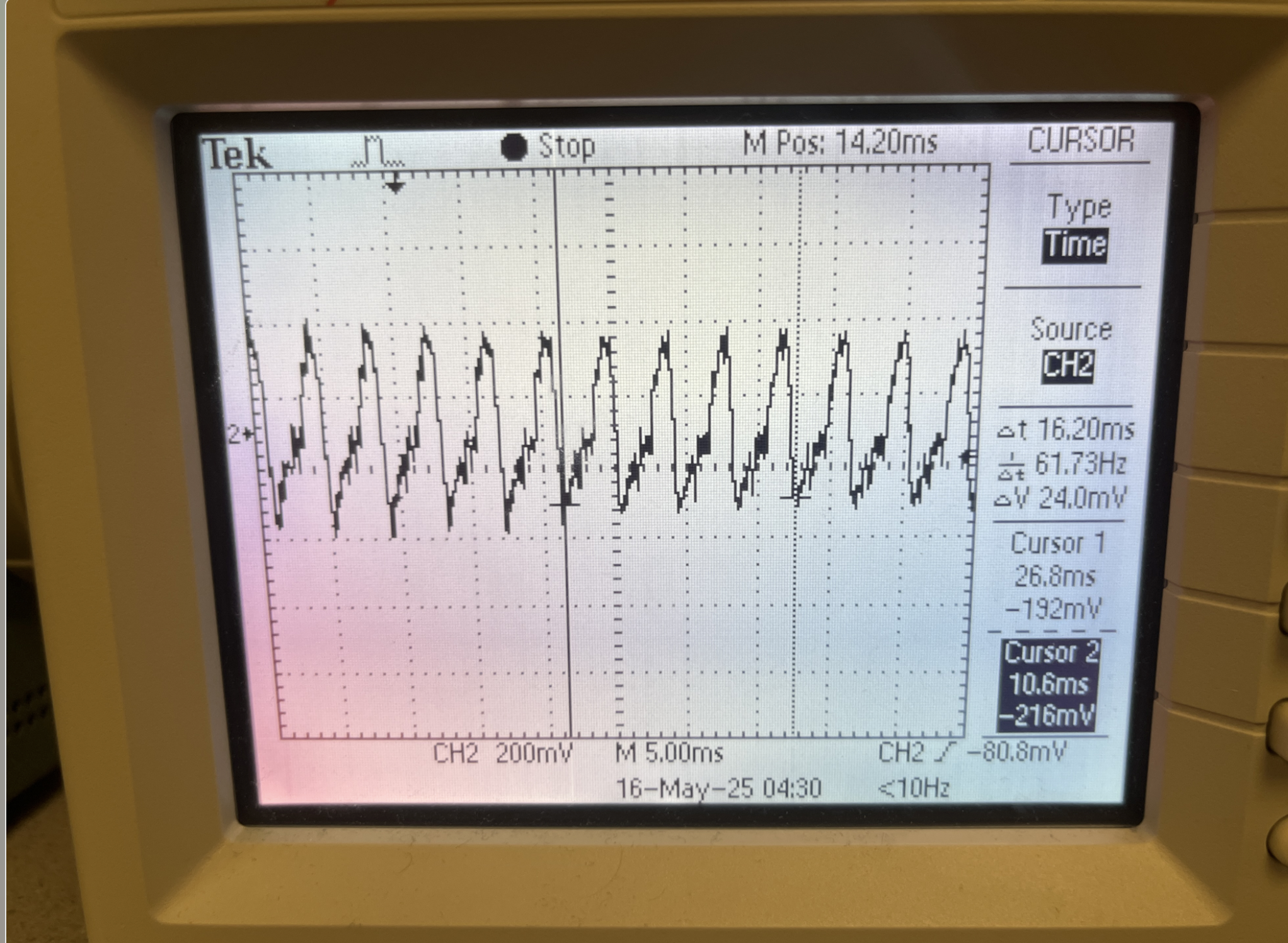

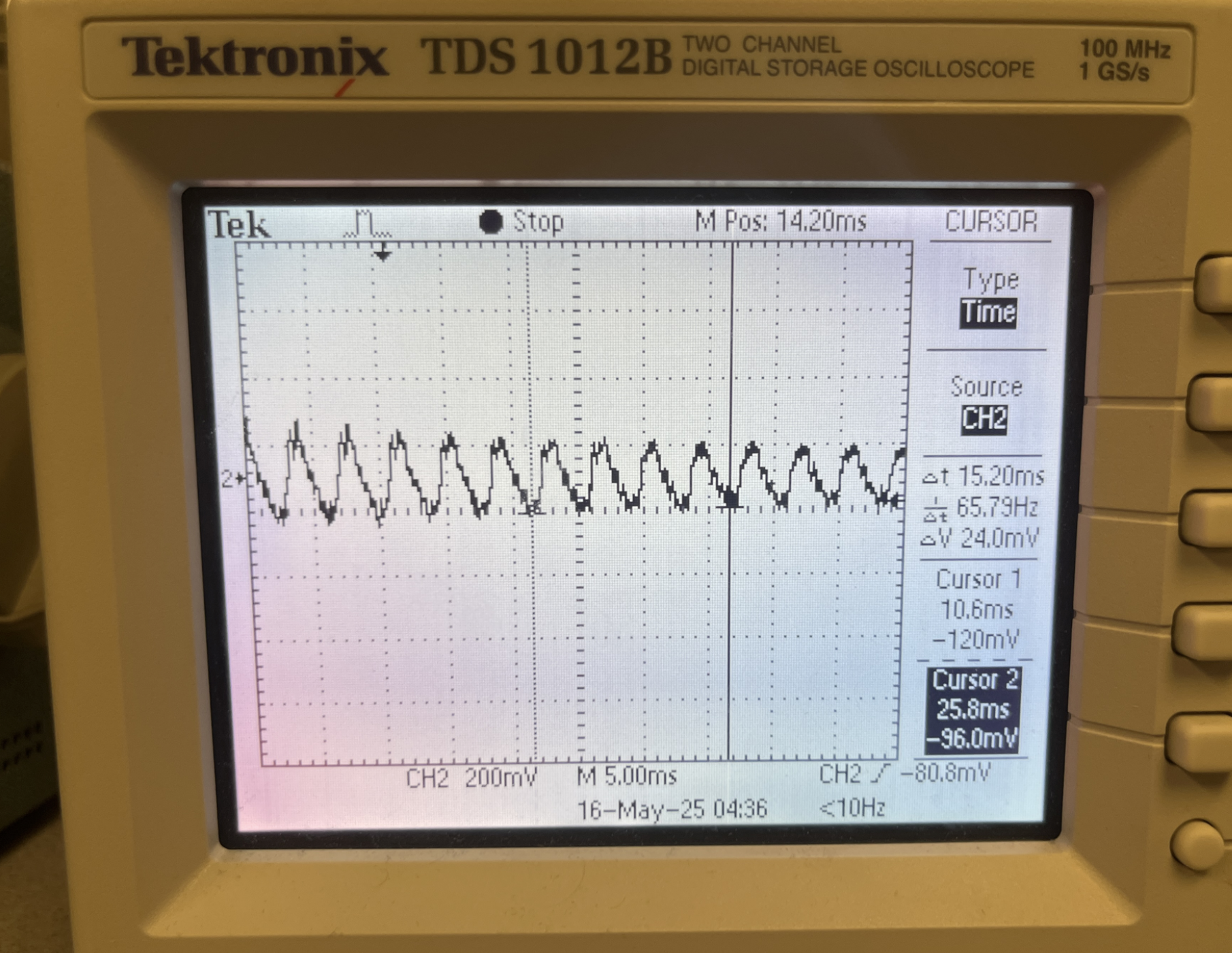

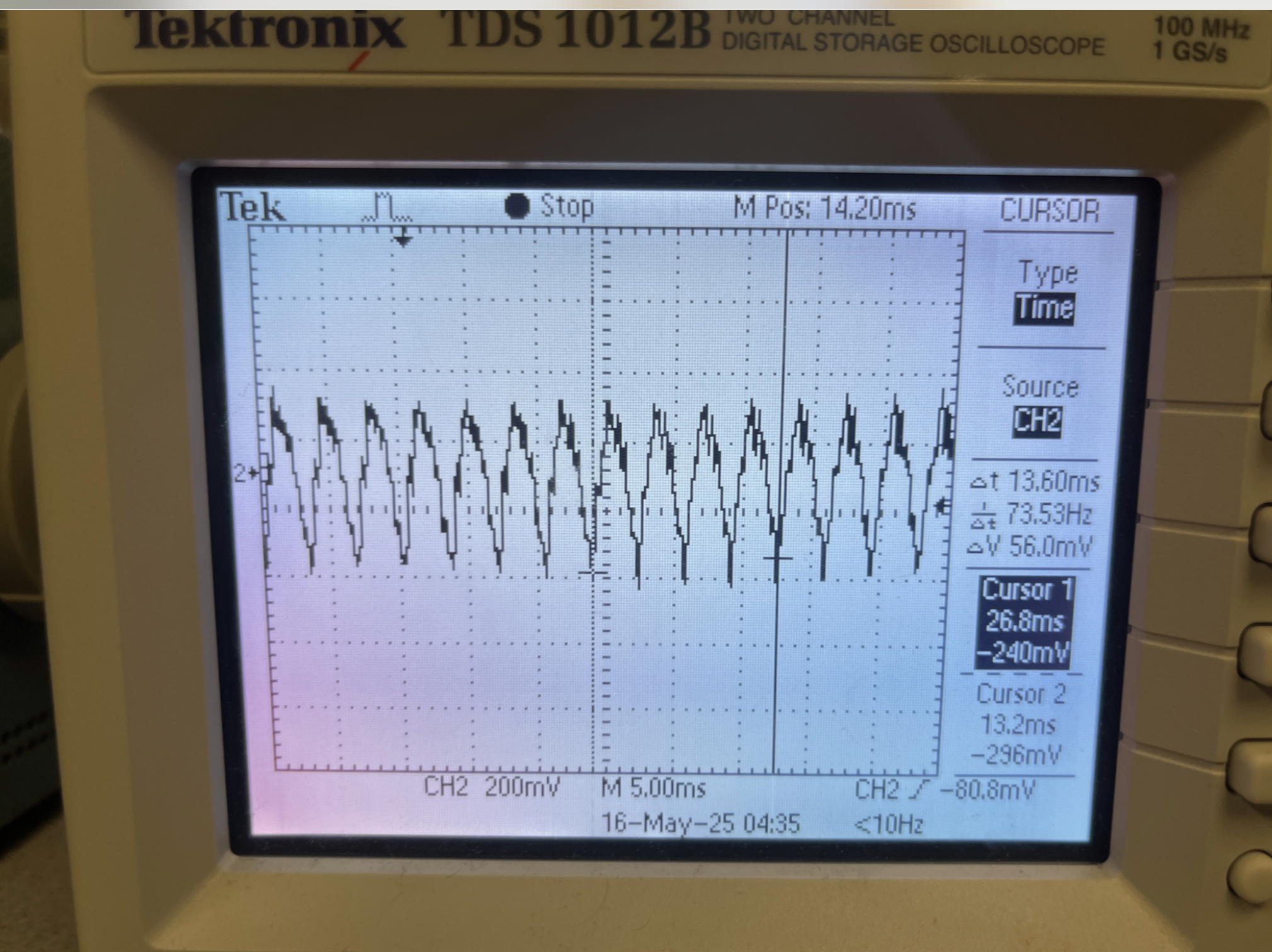

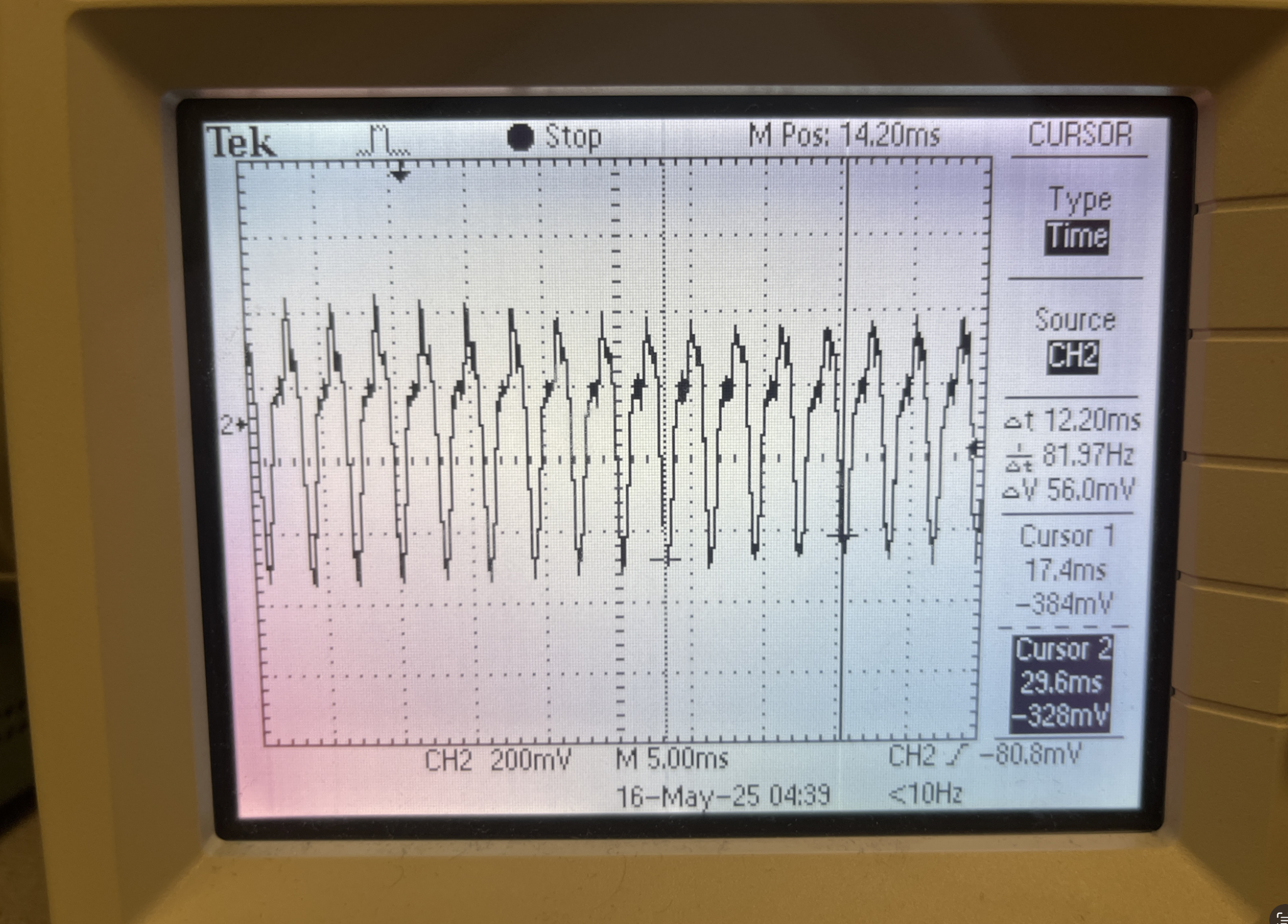

Lastly, the ISR handles the frequency detection logic (see the Background Math section for more information). Each time a new note is played, 4000 consecutive low-passed samples are placed in a sample buffer array (low-pass filtering is also covered in the Background Math section). Once all of them are acquired, the software iterates through the array with a for-loop. It counts the number of times the signal jumps from below its mean value to above it, or vice versa. This gives the number of crossovers. From that count, together with the known sampling rate and array length, we can calculate the frequency of the signal with good accuracy.
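The crossover count turns into a frequency because a periodic signal crosses its mean twice per period. That gives approximately f = crossings × fs / (2 × N). The sketch below implements this rule. It assumes the buffer already holds the low-passed samples. It also takes the mean from the buffer itself, which is an assumption; a fixed 1.44 V bias constant would work similarly. The function name is hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

#define FS_HZ 10000.0    /* sampling rate */
#define WINDOW_LEN 4000  /* 0.4 s of samples */

/* Estimate frequency from mean crossings in either direction:
 * f ~= crossings / (2 * T), with T = n / fs. */
static double zero_crossing_freq(const uint16_t *x, size_t n, double fs) {
    double mean = 0.0;
    for (size_t i = 0; i < n; i++) mean += x[i];
    mean /= (double)n;

    unsigned crossings = 0;
    for (size_t i = 1; i < n; i++) {
        int prev_below = x[i - 1] < mean;
        int cur_below = x[i] < mean;
        if (prev_below != cur_below) crossings++;  /* jumped across the mean */
    }
    return crossings * fs / (2.0 * (double)n);
}
```

With 4000 samples at 10 kHz, each extra crossing changes the estimate by fs / (2N) = 1.25 Hz. This sets the resolution of the measurement.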

It is worth mentioning that each frequency measured this way is passed through the bandpass() function. The resulting 0-4 value is then sent via UART to the counterpart Pico.

Program Details for VGA Graphics Pico

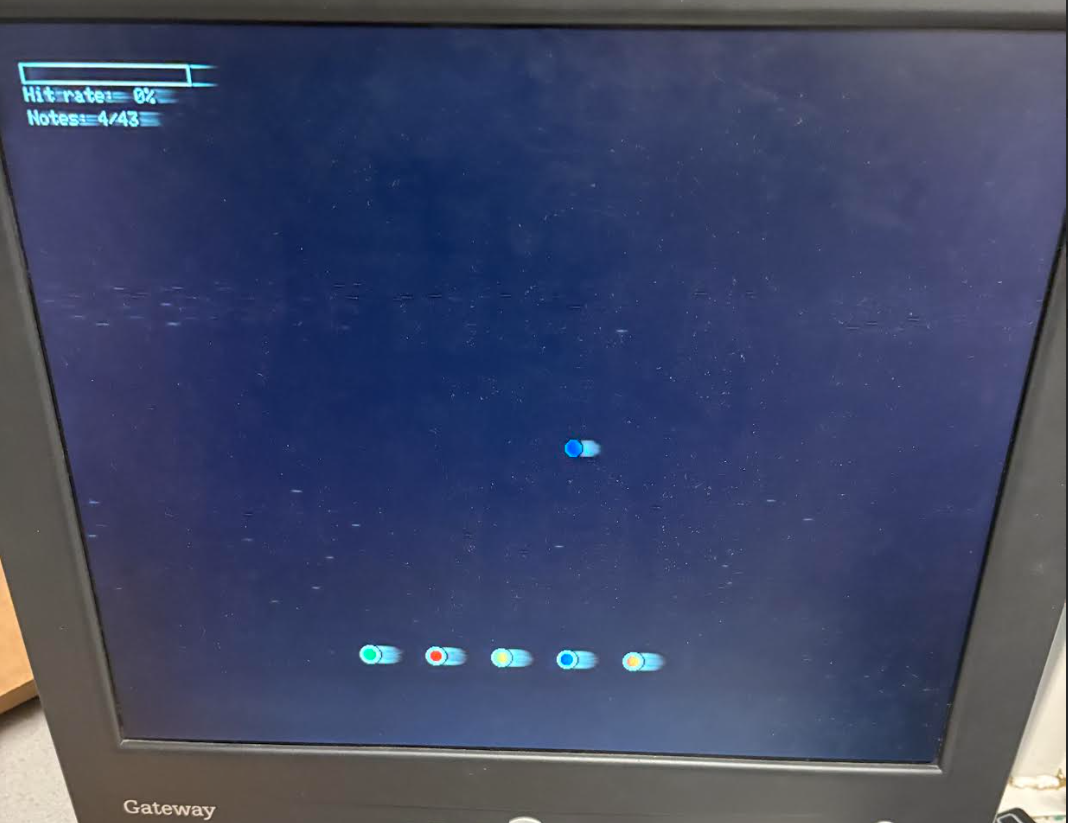

Main System Functions for the Game

main(): The entry point of the program. It initializes the VGA, UART, and GPIO components and sets the initial game state. It then adds the animation and GPIO threads to the scheduler and starts program execution.

initUART(): Initializes UART communication between the two Picos at 115200 baud with interrupt-driven reception. This lets the VGA Pico receive note data from the Audio Processing Pico.

initGPIO(): Initializes the GPIO pins for the five physical buttons with pull-up resistors enabled. This sets up the keyboard controller interface that players use to hit the notes on screen.

initVGA(): Initializes the VGA display system so that graphics can be rendered on the 640x480 VGA display that serves as the game screen.

Game Physics Functions

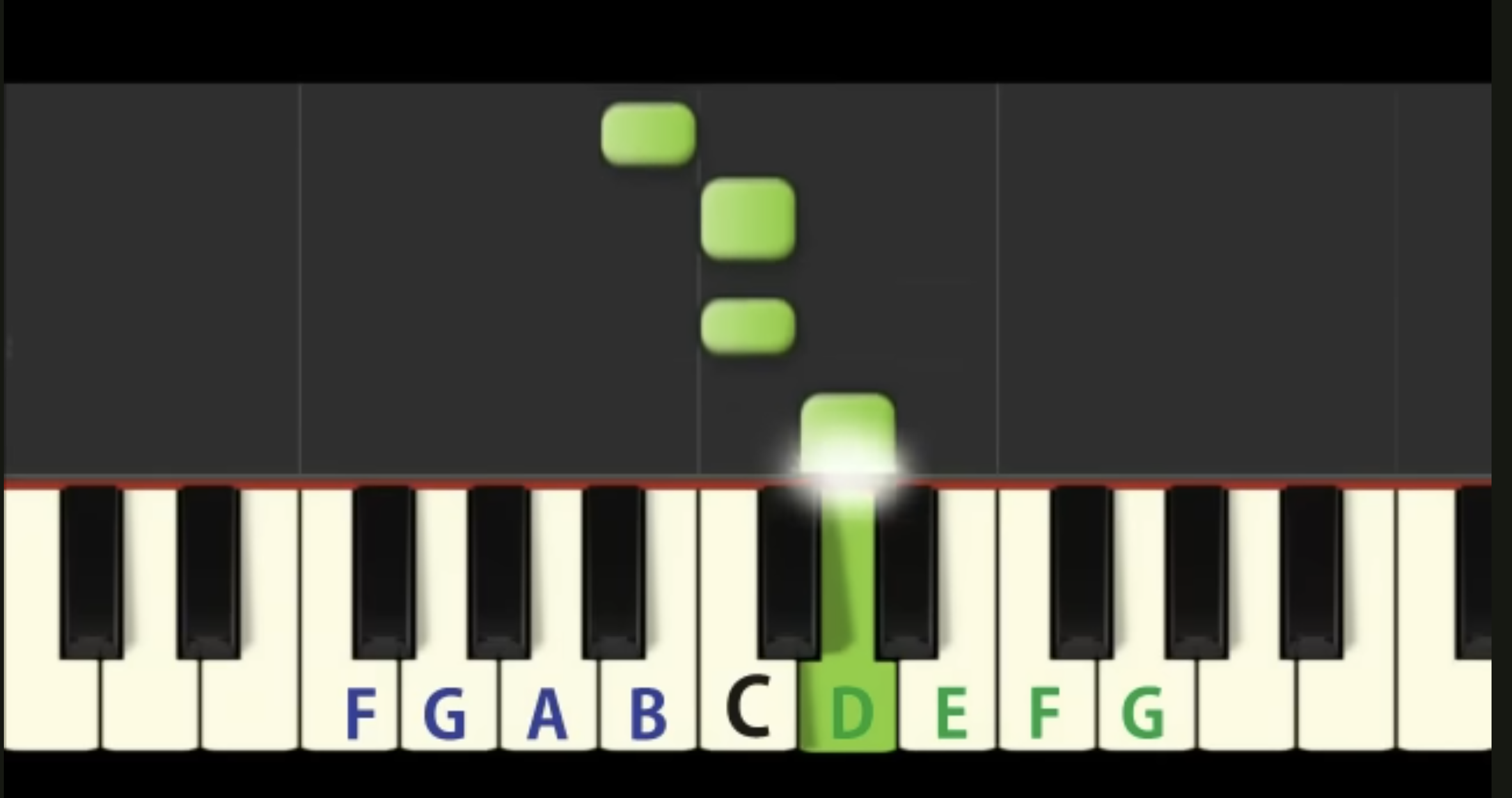

spawnNote(): Creates a new falling note at the top of the screen. The note comes either from data received over UART from the audio Pico or, during testing, from random generation. Its position and color are set by the frequency band reported by the audio Pico.

updateNotes(): The main animation function. It moves all active notes down the screen and handles collisions when buttons are pressed. It also updates the score when notes are hit and shows the game over screen once the song is finished.

checkButtonPressed(): Implements button debouncing so that quick or noisy button presses register as single, clean inputs.
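The debouncing logic is not shown in this section. One common approach is a counter-based debouncer, sketched below as an illustration rather than as the project's method. It accepts a new button state only after several consecutive polls agree, and it reports a single event on the press edge. The struct, function, and required count are hypothetical.

```c
#include <stdbool.h>

#define DEBOUNCE_COUNT 3  /* hypothetical: consecutive agreeing polls required */

typedef struct {
    bool stable;      /* debounced state: true = pressed */
    bool last_raw;    /* previous raw reading */
    int  same_count;  /* consecutive polls matching last_raw */
} debouncer_t;

/* Feed one raw reading (true = pressed). Returns true only on the poll where
 * the debounced state changes from released to pressed. */
static bool debounce_update(debouncer_t *d, bool raw_pressed) {
    if (raw_pressed == d->last_raw) {
        if (d->same_count < DEBOUNCE_COUNT) d->same_count++;
    } else {
        d->last_raw = raw_pressed;  /* reading changed: restart the count */
        d->same_count = 1;
    }
    if (d->same_count >= DEBOUNCE_COUNT && d->stable != raw_pressed) {
        d->stable = raw_pressed;
        return raw_pressed;         /* report the press edge only */
    }
    return false;
}
```

Waiting for several matching polls filters out contact bounce. Reporting only the press edge means that holding a button down does not trigger repeated hits.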

checkNoteHit(): Checks whether a button press lines up with a note inside the collision area, which spans from 20 pixels above to 10 pixels below the button line. It registers successful hits and updates the score.
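The collision area can be expressed as a simple range check. Screen y grows downward on the VGA display, so "above" the line means a smaller y value. The sketch assumes that `note_y` is the note's reference point (for example, its center). The function name is hypothetical.

```c
#include <stdbool.h>

#define HIT_ABOVE_PX 20  /* window extends 20 px above the button line */
#define HIT_BELOW_PX 10  /* and 10 px below it */

/* True if a note at note_y lies inside the collision window around the
 * button line (y increases downward, so "above" means smaller y). */
static bool in_hit_window(int note_y, int button_line_y) {
    return note_y >= button_line_y - HIT_ABOVE_PX &&
           note_y <= button_line_y + HIT_BELOW_PX;
}
```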

recordHit(): Increments the player's score when a note is successfully hit, updates the percentage display, and provides visual confirmation of the hit.

Interface Functions

drawButtons(): Draws the five colored buttons at the bottom of the screen, one for each of the five note frequency bands. The falling notes move toward these buttons.

highlightButton(): Provides visual feedback when a button is hit at the moment a note reaches it. The button is outlined in green for 300 ms, giving the player immediate feedback.

restoreButton(): Restores a button to its original appearance once the green outline period has ended.

updateScore(): Updates the score display whenever a note is hit or missed. The display includes a visual progress bar that fills based on player performance.
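The scoring arithmetic behind the percentage display and the progress bar can be sketched with integer math. This sketch assumes that the percentage is hits divided by notes played and that the bar fills in proportion to it. Both functions are hypothetical illustrations, not the project's code.

```c
/* Hit percentage 0..100; returns 0 before any notes have been played to
 * avoid dividing by zero at the start of a song. */
static int hit_percent(int hits, int notes_played) {
    if (notes_played <= 0) return 0;
    return (hits * 100) / notes_played;
}

/* Filled width in pixels of a progress bar bar_width pixels wide. */
static int progress_fill_px(int percent, int bar_width) {
    if (percent < 0) percent = 0;
    if (percent > 100) percent = 100;
    return (percent * bar_width) / 100;
}
```

Integer arithmetic truncates, so 2 hits out of 3 shows as 66%. This is usually acceptable for an on-screen score.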

drawGameOverScreen(): When all notes in a song have finished, shows "GAME OVER!" along with the player's final score and hit percentage. It also indicates that pressing any of the colored buttons restarts the game.

resetGame(): Reinitializes all game variables, clears the screen, and prepares a new game.

Thread Management Functions

protothread_gpio(): A thread that monitors the physical button states and handles button presses for the game mechanics. It also manages game state transitions such as starting and restarting the game.

protothread_anim(): The main animation thread. It maintains frame timing, updates note positions, manages button highlighting, and keeps the display running smoothly at 30 fps.
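The frame timing for a fixed-rate animation loop can be sketched as follows. The thread measures how long a frame took to draw and then yields for the rest of the frame period. In the RP2040 protothreads examples, this is typically done with `time_us_32()` and `PT_YIELD_usec()`, though the project's exact loop is not shown here. The sketch also converts the 300 ms highlight into a frame count at 30 fps. The names are hypothetical.

```c
#include <stdint.h>

#define FRAME_RATE 30
#define FRAME_PERIOD_US (1000000 / FRAME_RATE)                /* 33,333 us */
#define HIGHLIGHT_MS 300
#define HIGHLIGHT_FRAMES ((HIGHLIGHT_MS * FRAME_RATE) / 1000)  /* 9 frames */

/* Time to wait after a frame whose drawing took elapsed_us. Returns 0 if
 * the frame overran, so the next frame starts immediately. */
static int64_t spare_time_us(int64_t elapsed_us) {
    int64_t spare = FRAME_PERIOD_US - elapsed_us;
    return spare > 0 ? spare : 0;
}
```

Counting the highlight in frames lets the animation thread restore a button after 9 frames without a separate timer.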

UART Communication Functions

on_uart_rx(): The interrupt handler for incoming UART data from the audio Pico. When a new note is detected, it receives the note's frequency band as a button index from 0 to 4.
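A common way to hand data from an RX interrupt to a thread is a small single-producer, single-consumer ring buffer. The sketch below is an illustration, not the project's implementation. The ISR would drain the UART with `uart_is_readable()` and `uart_getc()` and push each band index, and the animation thread would pop pending notes and spawn them. The queue size and names are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

#define NOTE_QUEUE_LEN 16  /* hypothetical size; holds up to 15 notes */

/* The ISR only advances q_head and the thread only advances q_tail, so on a
 * single core no lock is needed in practice. volatile because the ISR
 * modifies these asynchronously. */
static volatile uint8_t note_queue[NOTE_QUEUE_LEN];
static volatile unsigned q_head = 0, q_tail = 0;

/* Called from the RX interrupt with each received band index (0..4). */
static bool note_queue_push(uint8_t band) {
    unsigned next = (q_head + 1) % NOTE_QUEUE_LEN;
    if (next == q_tail) return false;  /* full: drop the note */
    note_queue[q_head] = band;
    q_head = next;
    return true;
}

/* Called from the animation thread; returns false if nothing is pending. */
static bool note_queue_pop(uint8_t *band) {
    if (q_tail == q_head) return false;
    *band = note_queue[q_tail];
    q_tail = (q_tail + 1) % NOTE_QUEUE_LEN;
    return true;
}
```

The ISR stays short this way, and any drawing happens in the thread rather than inside the interrupt.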

*For reference, our code can be found in Appendix B.